Many Australian workers admit they present AI-generated content as their own

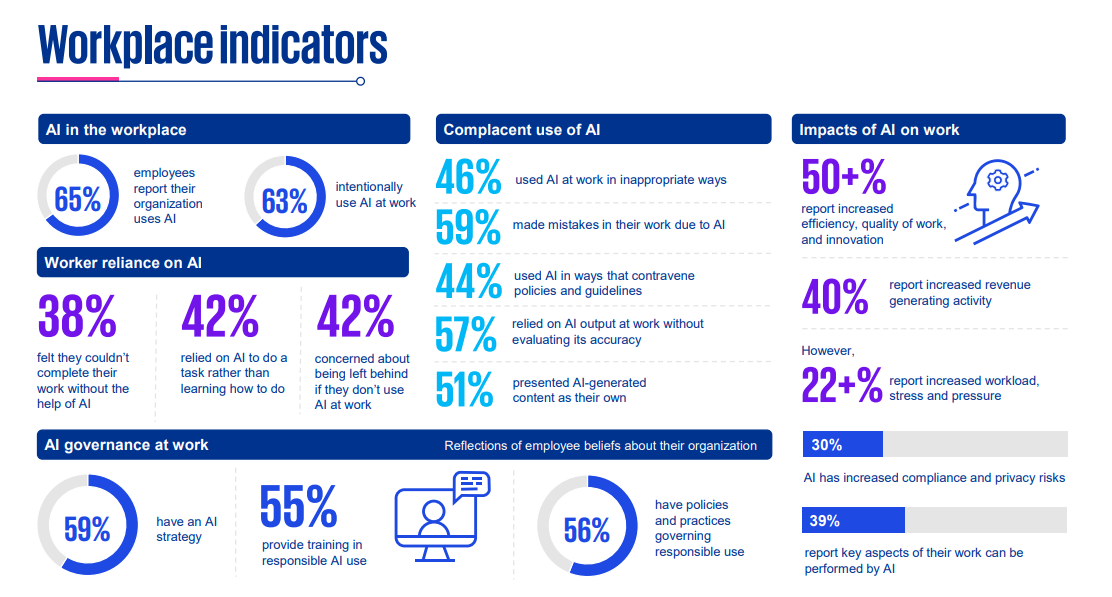

Many Australian workers admit they present AI-generated content as their own and 46% say they use the technology at work in inappropriate ways, according to a comprehensive new global study on trust in artificial intelligence.

The Trust in Artificial Intelligence Global Insights, released by KPMG and carried out by the University of Melbourne, found 51% of Australians (compared to 57% globally) were not transparent about their use of AI or avoided revealing their use of AI to complete work, while 44% admitted to using AI in ways that contravened policies and guidelines.

It warned that many organisations are rolling out AI without ensuring proper oversight or AI literacy, creating a "complex risk environment", and found that Australians want to see better regulation and governance.

The study surveyed more than 48,000 people across 47 countries, including Australia, between November 2024 and January 2025 and found trust remains an issue, with 54% globally "wary" about trusting AI.

“Psychological safety around the use of AI in work is critical. People need to feel comfortable to openly share and experiment with how they are using AI in their work and learn from others for greater transparency and accountability,” Professor Nicole Gillespie, who led the study, said.

HRD spoke to Dr David Tuffley, Senior Lecturer at Griffith University’s School of Information and Communication Technology, who said it’s a dangerous game to play.

“It massively detracts from the transparency and accountability of organisations – especially considering AI is far from perfect. It’s an inference engine, it can hallucinate, it can make things up,” Dr Tuffley said. “It’s not a lie, per se – they’re just making the most logical inference at the information you have. Not having some sort of governance over the whole thing can only harm you down the road.”

“I see many students try – and mostly fail – to cover up their use of AI. I think, now, a lot of people are using it in a naïve or blasé manner because, if truth be told, we don’t know enough about it yet,” he added.

The table below shows the Australian findings from the report.

Tuffley used the German expression ‘Sturm und Drang’ – meaning the ‘stresses and strains’ – to describe where AI currently sits, and where it will end up in the future.

“It relates to the dynamic tensions on both sides of an argument – which eventually sees you end up in the middle. Not using it to its full capability is a waste, and putting an overreliance in it is also a dangerous game. Now we’ve got this quite polarized scenario of ‘one or the other,’ which I think is why a lot of people are getting it wrong,” he told HRD.

With AI evolving at a rapid rate, Tuffley suggested the pace of change could be one reason the technology is being misused.

“It blows my mind every day – I spend a lot of time keeping on top of things and it’s developing at such an exceptional rate that I don’t think anyone can get a good enough grasp on what it can and can’t do in order to make a decision on what’s right and wrong.”

“AI is a fantastic tool – and it’s just that, a tool. I think people are either using it too much or not enough, which means we don’t really know exactly where we sit. But, like I’ve said before, we’ll end up in the middle soon,” Tuffley jovially added.

In addition to misuse of artificial intelligence in the workplace, the study also shows Australians want to see better regulation and governance of its use.

Australians expect international laws and regulation (76%), as well as oversight by the government and existing regulators (80%) and co-regulation with industry (77%). However, only 30% believe current laws, regulation and safeguards are adequate to make AI use safe, the report states.

Tuffley noted that technologies such as these take time to establish norms – describing the period when the internet first became popular as “the Wild West.”

“When things like this burst onto the scene, it takes a while to calm things down. It’s like when the lawmen first came to the cowboys – slowly but surely, lawless territories become lawful. It’s the same with AI. We just need time.”

“You need to generate policy from the bottom up and the top down. Governments and legislative bodies will have their say on the right or wrong – so will the people using the tech daily. We need to implicitly understand AI’s usage before we can jump to any conclusions,” Tuffley added.

He concluded by telling HRD that AI is currently being implemented in efficient ways – but we have to wait until the rate of change slows down before universal guardrails are put in place.

The report also found Australians are less trusting of AI than people in most countries. As well as being wary of AI, Australia ranks among the lowest globally on acceptance, excitement and adoption – alongside New Zealand and the Netherlands.

“The public’s trust of AI technologies and their safe and secure use is central to acceptance and adoption,” Professor Gillespie says.

“Yet our research reveals that 78% of Australians are concerned about a range of negative outcomes from the use of AI systems, and 37% have personally experienced or observed negative outcomes ranging from inaccuracy, misinformation and manipulation, deskilling, and loss of privacy or IP.”