'Lack of AI adoption can pose increased security risks, particularly if employees are using unregulated tools'

The popularity of artificial intelligence technologies extends well beyond rank-and-file workers, and this could pose a risk to organizations, according to a report by CDW.

Overall, nearly half (47%) of Canadian employees admitted to using unregulated AI tools.

Additionally, about a third (33%) of these users engage with unregulated AI tools weekly, signaling strong demand for accessible, organization-approved AI solutions, says CDW.

Notably, more than a third of mid-level (38%) and senior/executive-level (37%) employees use AI tools that are not officially approved by their workplace, compared to only 23% of entry-level employees.

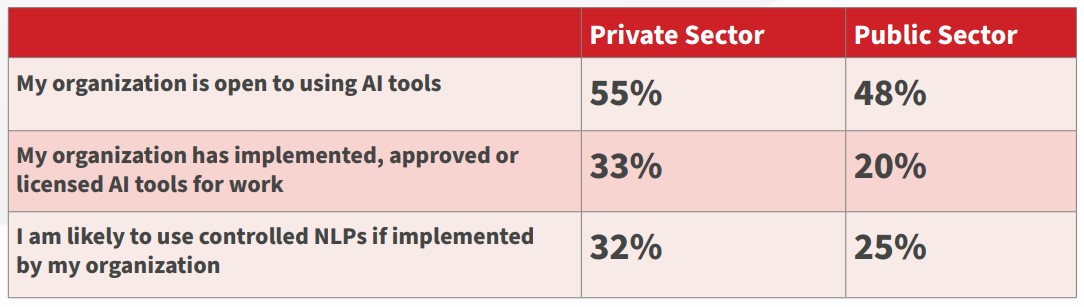

Source: CDW

“The high volume of unregulated GenAI users in Canada is concerning, as using public versions of these tools without the proper training poses significant security and reputational risks for organizations,” says CDW in the report titled Uncharted Innovation: The Rise of Unofficial AI Tool Usage Among Canadian Office Workers.

“This is exemplified in how employees are teaching themselves to use unregulated tools. Most are learning how to use unregulated AI tools through trial and error (73%) or informal methods like social media (25%) and online forums or communities (23%), rather than structured training programs. This lack of formal education increases the risk of mishandling data and misusing tools.”

Policies surrounding the use of AI appear to be falling behind the growing use of the emerging technology among HR professionals, according to a previous report.

Over six in 10 (61%) Canadian employees feel comfortable about AI use in the workplace when tools have been implemented by their organization, compared to 43% of those in organizations without approved AI tools, according to CDW’s survey of 1,000 office workers, conducted from Sept. 23 to Oct. 1, 2024.

However, 75% of those who use unapproved AI tools at work use public natural language processing (NLP) and interaction tools like ChatGPT, Claude and Google Gemini.

With most of these users teaching themselves rather than receiving structured training, a top-down approach to GenAI is crucial, according to CDW.

“As AI becomes embedded in society and the workplace, employers must be prepared to provide the necessary framework to guide responsible use,” says Brian Matthews, head of services, Digital Workspace at CDW Canada. “Organizations that don’t prioritize formal AI adoption risk being left behind. Lack of AI adoption can also pose increased security risks, particularly if employees are using unregulated tools.”

The federal government recently launched the Canadian Artificial Intelligence Safety Institute (CAISI) to bolster Canada’s capacity to address AI safety risks.