Findings show that Meta's advertising algorithm for Facebook ads has a discriminatory effect

A European human rights body has ruled that Meta, Facebook's parent company, engaged in gender discrimination through the algorithm that delivers its job advertisements.

The Netherlands Institute for Human Rights found that Meta uses an advertising algorithm that reinforces gender stereotypes by showing ads for "typically female professions" to women and vice versa.

"The Board therefore rules that the defendant made prohibited discrimination on the grounds of gender when showing advertisements for vacancies to Facebook users in the Netherlands," read the ruling, as translated from Dutch.

The human rights body has ordered Meta to change its advertising algorithm to prevent further discrimination.

The order is a response to a complaint filed by Bureau Clara Wichmann, a women's rights organisation, in collaboration with investigative campaigning organisation Global Witness.

"Today is a great day for Dutch Facebook users, who have an accessible mechanism to hold multinational tech companies such as Meta accountable and ensure the rights they enjoy offline are upheld in the digital space," said Berty Bannor, staff member at Bureau Clara Wichmann, in a statement.

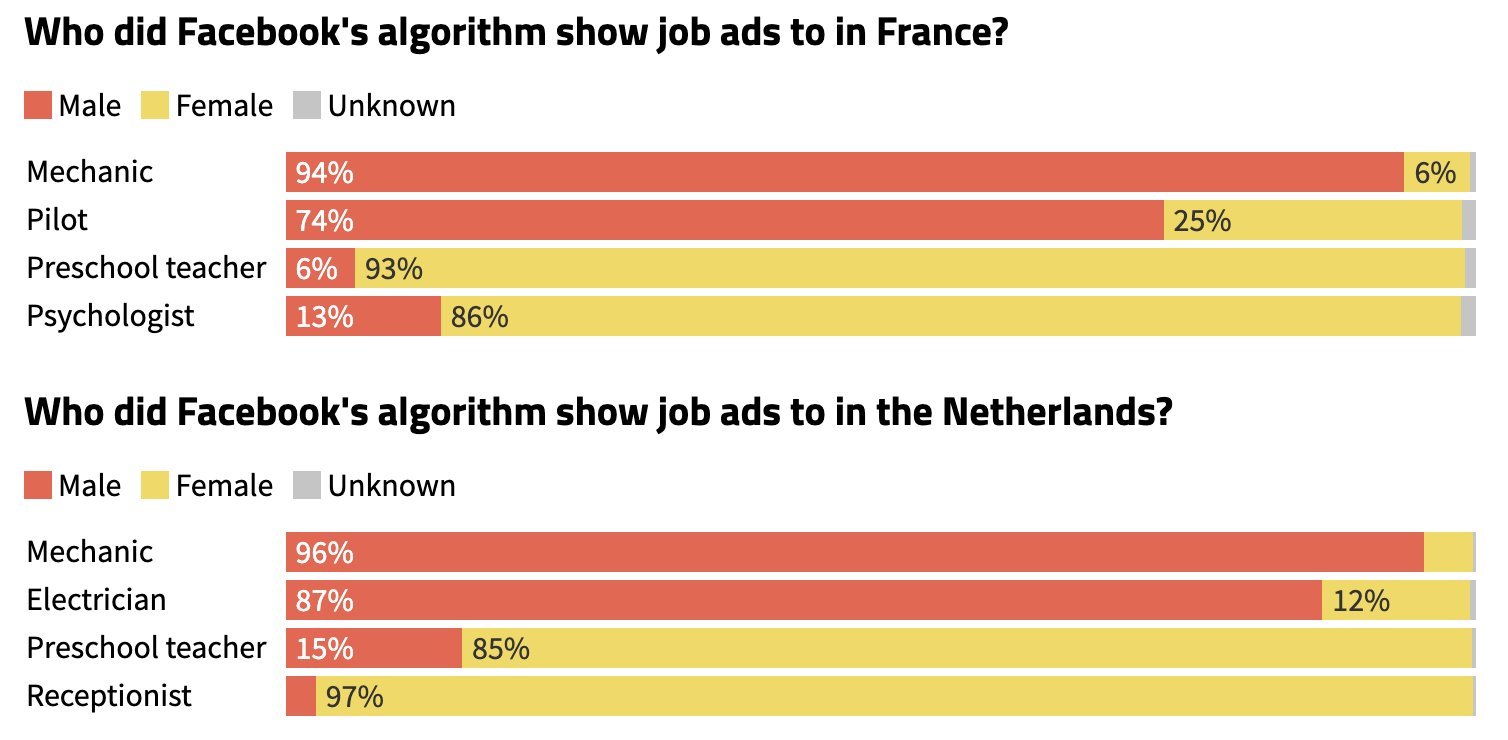

Global Witness in 2023 released research revealing that Facebook is "showing adverts for job vacancies overwhelming to one gender relative to another."

It found that the ads it posted for mechanic vacancies in the United Kingdom, the Netherlands, France, India, Ireland, and South Africa were shown mainly to men (91%). On the other hand, ads for pre-school vacancies were shown to an audience consisting mainly of women (79%).

Source: Global Witness

"We are concerned that in showing job ads predominantly to one gender the company's ad delivery algorithm is not just replicating, but exacerbating the biases we see in society, narrowing opportunities for users, and frustrating progress and equity in the workplace and society at large," Global Witness said in its report in 2023.

The practice is unlawful under existing anti-discrimination legislation in the Netherlands and Europe, which prohibits distinctions based on gender when displaying job adverts, according to Global Witness.

Rosie Sharpe, Senior Campaigner on Digital Threats at Global Witness, said they hope the recent ruling from the Netherlands Institute for Human Rights can be a "springboard for further action" elsewhere.

"This ruling marks an important step towards holding Big Tech accountable for how they design their services and the discriminatory impact their algorithms can have on people," Sharpe said in a statement.

A Meta spokesperson said it would not be commenting on the matter, CNN reported.

However, Meta spokesperson Ashley Settle previously told the news outlet that the tech giant employs "targeting restrictions to advertisers when setting up campaigns for employment, as well as housing and credit ads."

"We do not allow advertisers to target these ads based on gender," Settle told CNN in 2023. "We continue to work with stakeholders and experts across academia, human rights groups, and other disciplines on the best ways to study and address algorithmic fairness."

The ruling comes as the tech giant also recently announced changes to its diversity, equity, and inclusion (DEI) goals amid a shifting legal landscape surrounding such measures in the United States.

Meta previously told employees in a memo that it is eliminating its DEI team, ending its equity and inclusion programmes, and changing its hiring and supplier diversity practices.

Meta's case comes on the heels of previous warnings that algorithms may reinforce discrimination in workplaces.

A paper from Sophie Kniepkamp, Florian Pethig, and Julia Kroenung in The Gender Policy Report previously warned jobseekers and employers that algorithms may reinforce discrimination.

"It is important to create awareness about how algorithms show the same biases as humans in decision-making processes," the paper read.

"As AI becomes further integrated into workplace hiring, this may exacerbate gender bias in employment. Victims of discrimination will have no way of pointing their finger at someone who has committed a misdeed since algorithms cannot be held accountable or brought to justice for bias."

The use of algorithms in hiring is already among the subject matter priorities of the US Equal Employment Opportunity Commission (EEOC).

According to the EEOC, it will focus on recruitment and hiring practices and policies that discriminate on any basis that is unlawful under its statutes.

"These include the use of technology, including artificial intelligence and machine learning, to target job advertisements, recruit applicants, or make or assist in hiring decisions where such systems intentionally exclude or adversely impact protected groups," it said in its Strategic Enforcement Plan.

Hacking HR, a human resources services organisation, advised organisations last year to vet any AI software before implementing it to ensure fairness.

"The prevalence of AI bias has led some AI algorithms to begin implementing fairness-aware algorithms, which analyse data with consideration for potential areas of discrimination or bias," it said on LinkedIn.

Its other advice for avoiding AI hiring biases includes: