'In the years ahead, it'll become more critical for organisations to both identify and address deepfake risks,' says expert

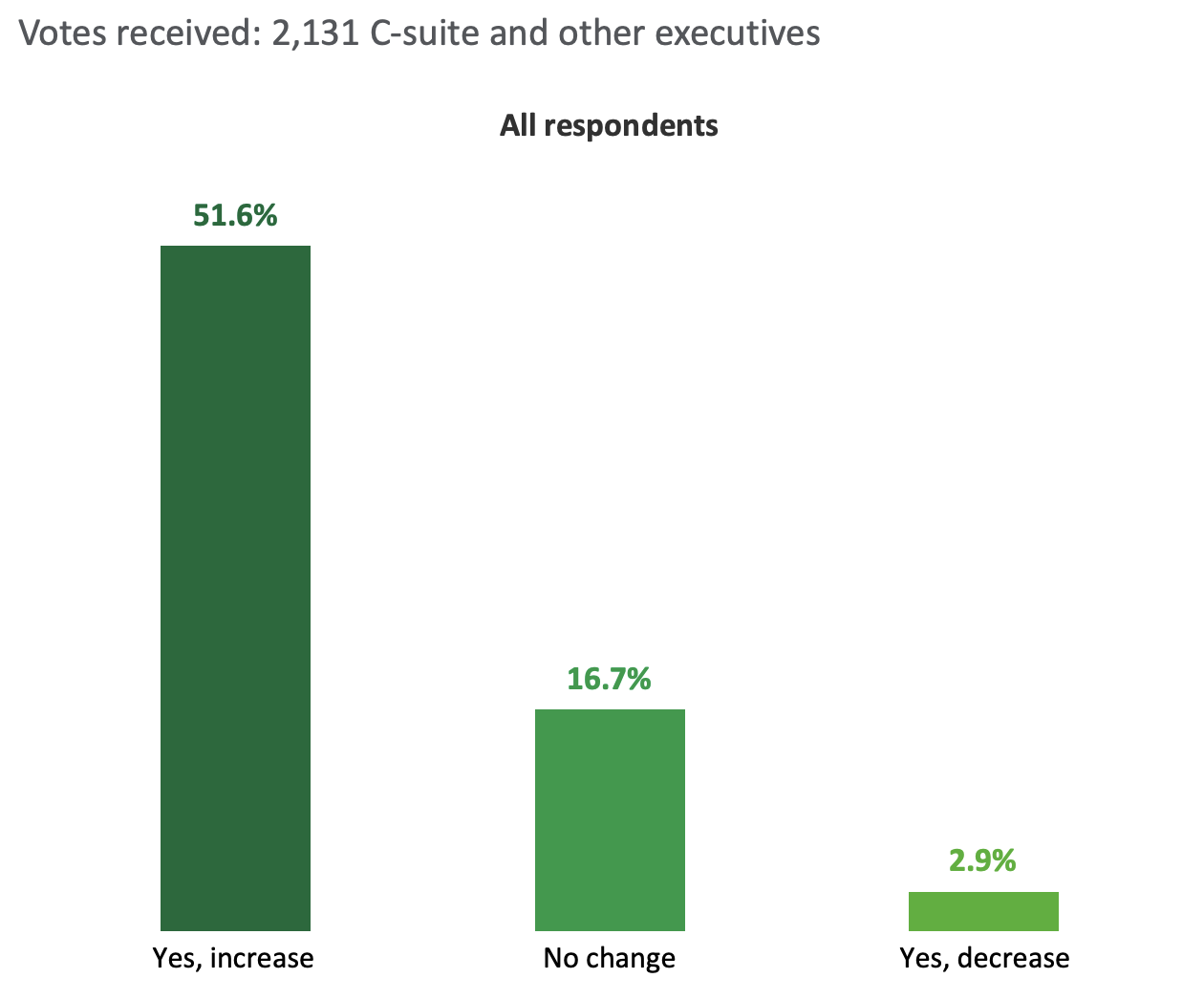

More than half of executives are expecting an increase in deepfake financial fraud cases in the next 12 months, according to a new poll from Deloitte.

The survey, which polled over 1,000 C-suite members and executives, found that 51.6% are expecting an increase in the number and size of deepfake attacks targeting their organisations' financial and accounting data.

A further 2.9% expect a decrease, while 16.7% expect no change over the next 12 months.

Source: Generative AI and the fight for trust

Deepfake financial fraud is a category of cybercrime that uses deepfake technology to defraud organisations or individuals of cash assets or cause financial losses, according to Deloitte.

About a quarter of Deloitte's respondents (25.9%) said their organisations had experienced one deepfake incident (10.8%) or more than one (15.1%) in the past 12 months.

One of the most notable cases of deepfake fraud in 2024 happened to a multinational company in Hong Kong, which lost HK$200 million after an employee fell victim to a sophisticated deepfake video conference scam.

The clerk was duped into transferring the amount after participating in a video conference where other participants turned out to be AI-generated deepfake personas.

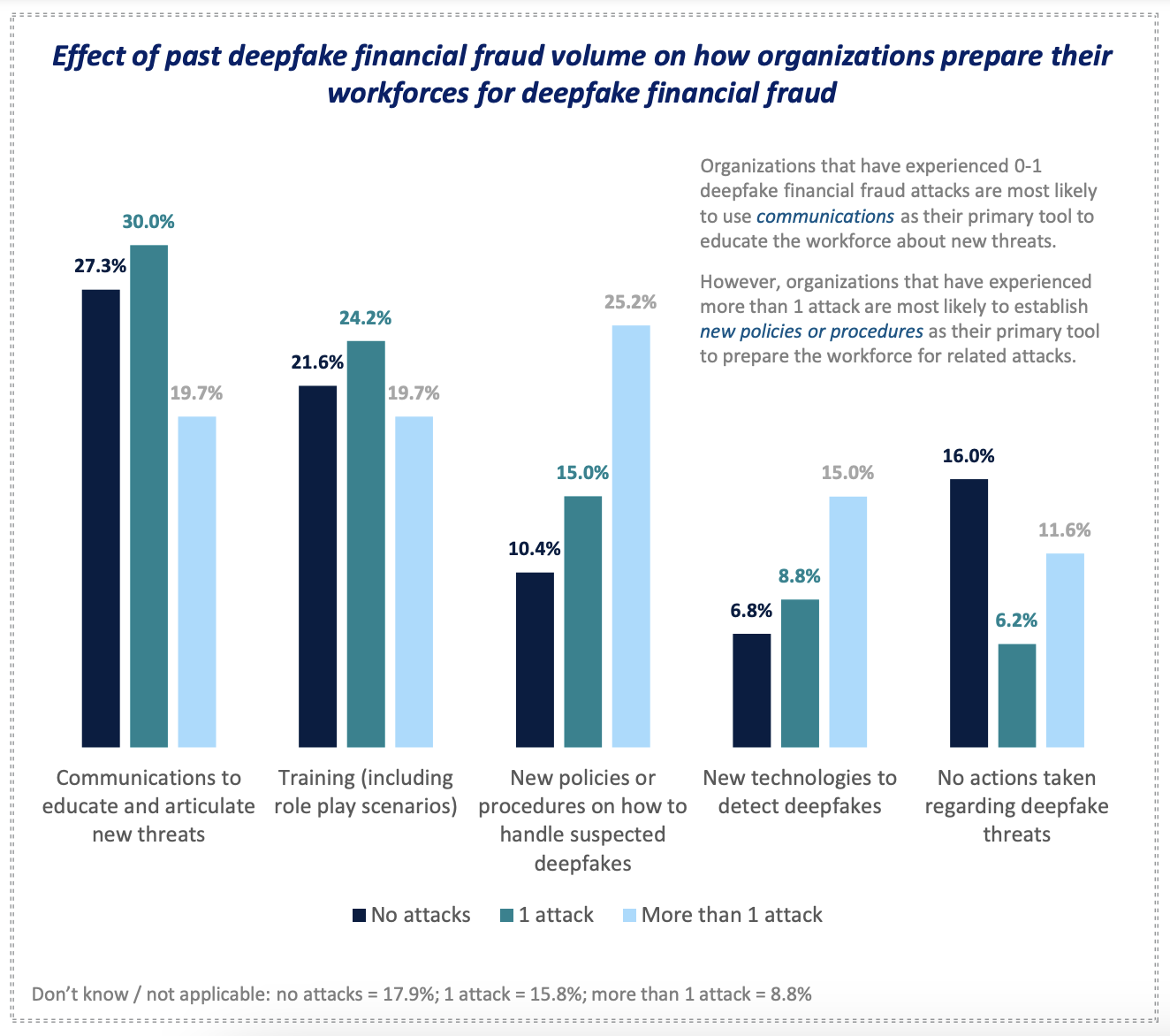

Mike Weil, digital forensics leader and a managing director at Deloitte Financial Advisory Services LLP, said organisations tend to become better prepared after their first deepfake attack.

"The good news is that concern about future incidents seems to peak after the first attack, with subsequent events tempering concerns as organisations gain more experience and become better at detecting, managing and preventing fraudsters' deepfake schemes," Weil said in a statement.

According to the survey, a third of those who experienced one deepfake financial fraud attack said they prepare their workforce by communicating with employees and educating them about new threats.

Nearly a quarter said they train employees, including through role-play scenarios.


The findings come after a previous poll from Trustmi revealed that AI-driven attacks, such as deepfakes and executive impersonation, have been "quickly gaining momentum" of late.

"Deepfake financial fraud is rising, with bad actors increasingly leveraging illicit synthetic information like falsified invoices and customer service interactions to access sensitive financial data and even manipulate organisations' AI models to wreak havoc on financial reports," Weil said.

"In the years ahead, it'll become more critical for organisations to both identify and address deepfake risks, not the least of which will be those efforts targeting potentially market-moving accounting and financial data to perpetrate fraud."